What is TAO Bittensor?

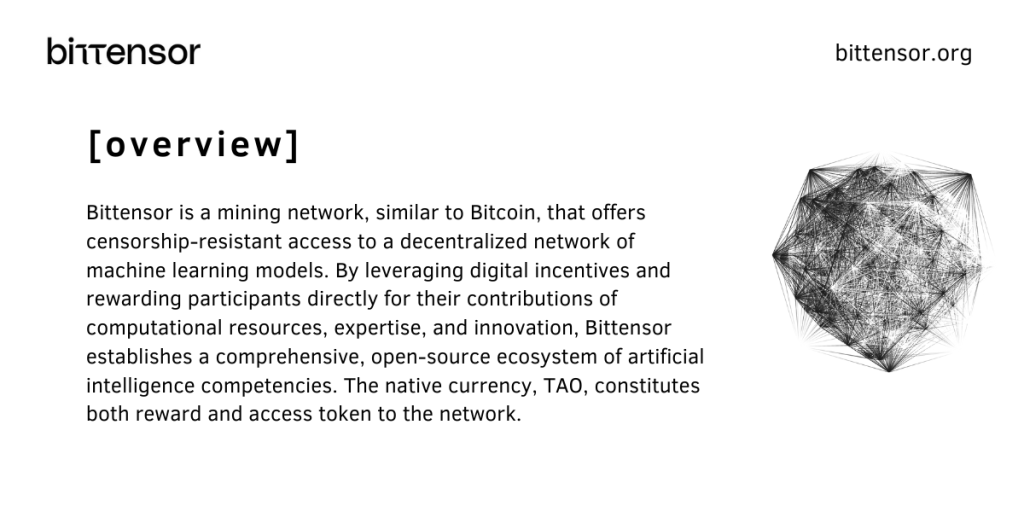

Bittensor decentralizes the development of machine learning platforms by creating a peer-to-peer market for machine intelligence. It lets AI models pool their collective intelligence into a kind of digital hive mind. This decentralized approach allows knowledge to be shared and expanded quickly, like a library that keeps growing as participants contribute to it. By using distributed networks and rewarding collaboration, Bittensor aims to drive innovation in machine learning.

The Bittensor protocol establishes a marketplace that transforms machine intelligence into a tradable commodity. By creating an open and accessible network, it fosters innovation from a diverse global community of developers.

TAO holds intrinsic value within the Bittensor ecosystem as it represents the embodiment of intelligence and knowledge. Each TAO token signifies the contributions made by developers and the quality of their models. The value of TAO extends beyond a mere currency, as it serves as a representation of the collective intelligence and insights contained within the network.

How does the Bittensor Protocol work?

The Bittensor Protocol is a decentralized machine learning protocol that enables the exchange of machine learning capabilities and predictions among participants in a network. It facilitates the sharing and collaboration of machine learning models and services in a peer-to-peer manner.

Here is an overview of how the Bittensor Protocol works:

Network Architecture

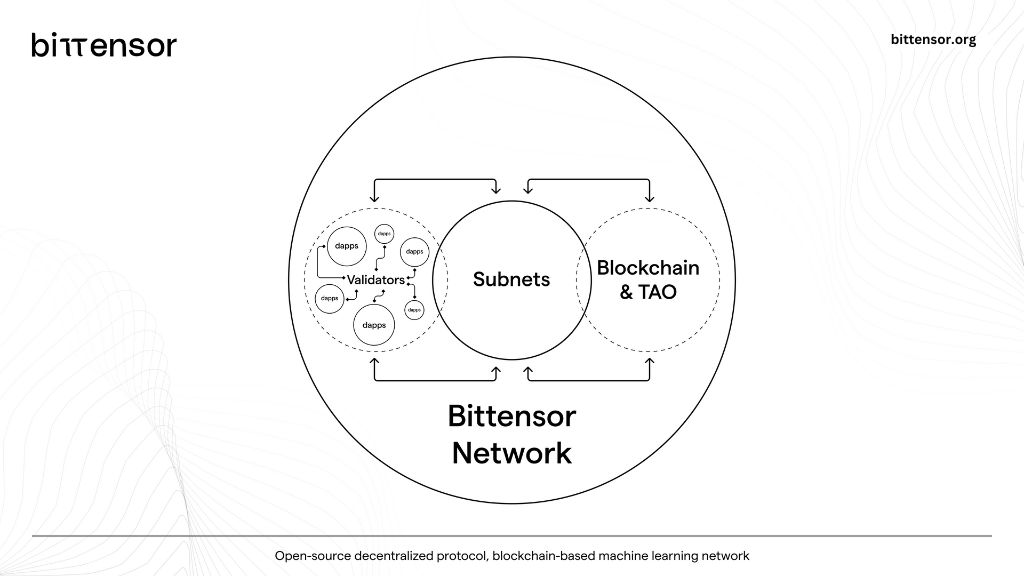

The Bittensor network consists of a set of nodes (miners) that participate in the protocol. Each node runs Bittensor client software that enables it to interact with other nodes in the network.

Registration

Participation in the Bittensor Protocol starts with registering a hotkey. To join the Bittensor network and mine TAO tokens, users must register a hotkey either by solving a proof of work (PoW) or by paying a fee using the recycle_register method.
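The proof-of-work route can be pictured with a generic hash puzzle. The sketch below is only an illustration under stated assumptions: the hash function (SHA-256), the way the inputs are combined, and the names `solve_registration_pow`, `verify_registration_pow`, and `difficulty_bits` are hypothetical and are not taken from the Bittensor client.

```python
import hashlib


def _digest_value(block_hash: bytes, hotkey: bytes, nonce: int) -> int:
    """Hash the inputs together and read the digest as a big-endian integer."""
    payload = block_hash + hotkey + nonce.to_bytes(8, "little")
    return int.from_bytes(hashlib.sha256(payload).digest(), "big")


def solve_registration_pow(block_hash: bytes, hotkey: bytes, difficulty_bits: int) -> int:
    """Return the smallest nonce whose digest has `difficulty_bits` leading zero bits."""
    target = 1 << (256 - difficulty_bits)
    nonce = 0
    while _digest_value(block_hash, hotkey, nonce) >= target:
        nonce += 1
    return nonce


def verify_registration_pow(block_hash: bytes, hotkey: bytes, nonce: int, difficulty_bits: int) -> bool:
    """Checking a solution takes one hash, while finding it takes many."""
    return _digest_value(block_hash, hotkey, nonce) < (1 << (256 - difficulty_bits))


# Hypothetical inputs; a real network would supply the block hash and difficulty.
nonce = solve_registration_pow(b"example-block-hash", b"example-hotkey", difficulty_bits=12)
is_valid = verify_registration_pow(b"example-block-hash", b"example-hotkey", nonce, difficulty_bits=12)
```

The asymmetry between solving and verifying is what makes such a puzzle usable as an entry cost: the work is expensive for the registrant and cheap for everyone who checks it.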

Subnets

Once registered, a node becomes part of a subnet, which is a specific domain or topic within the Bittensor network. Each subnet has its own set of registered nodes and associated machine learning models.

Validators

Validators play a crucial role in the Bittensor network. They validate the responses and predictions provided by the miners. Validators ensure the integrity and quality of the data and models being exchanged within the network. Validators query miners and evaluate their responses to determine the accuracy and reliability of their predictions.

Validators also serve as access points to the Bittensor network, providing the interface through which users and applications interact with it.
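A minimal sketch of the query-and-evaluate loop might look like the following. It assumes miners can be modelled as plain functions and that quality is measured by token overlap with a reference answer; both are simplifications chosen for illustration, and `score_miners` is a hypothetical name rather than part of any Bittensor API.

```python
from typing import Callable, Dict

# Assumption: a miner is modelled as a function from prompt to answer text.
Miner = Callable[[str], str]


def score_miners(miners: Dict[str, Miner], prompt: str, reference: str) -> Dict[str, float]:
    """Query every miner and turn token-overlap scores into weights that sum to 1."""
    ref_tokens = set(reference.lower().split())
    raw: Dict[str, float] = {}
    for uid, miner in miners.items():
        answer_tokens = set(miner(prompt).lower().split())
        union = ref_tokens | answer_tokens
        # Jaccard similarity between the answer and the reference.
        raw[uid] = len(ref_tokens & answer_tokens) / len(union) if union else 0.0
    total = sum(raw.values())
    if total == 0:
        return {uid: 0.0 for uid in raw}
    return {uid: score / total for uid, score in raw.items()}


# Hypothetical miners used only to exercise the function.
example_miners = {
    "miner_a": lambda prompt: "paris is the capital",
    "miner_b": lambda prompt: "i do not know",
}
weights = score_miners(example_miners, "What is the capital of France?", "paris is the capital of france")
```

In practice a validator would use a task-specific quality measure, but the shape stays the same: query, score, normalise.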

Mining

Miners in the Bittensor network provide machine learning services by serving models that they host locally. When a client application requires a prediction, it sends a request to the Bittensor network, which routes the request to a miner registered as a provider for the required service. The miner processes the request with its model and returns the prediction to the client via the Bittensor network.

Consensus

The Bittensor network uses consensus algorithms to reach an agreement on the state of the network and ensure the integrity of the data being processed. Consensus mechanisms help prevent double-spending, ensure data consistency, and maintain the overall security of the network.

Incentive

The Bittensor network incentivizes participation and contributions through a token-based economy. Miners and validators are rewarded with tokens for their computational resources, accurate predictions, and other valuable contributions to the network. These incentives encourage active participation and help maintain the network’s stability and efficiency.

One of the most remarkable features of the Bittensor protocol is its incentivization mechanism. It rewards users who contribute valuable data or computational resources with TAO tokens.

What is the Consensus Mechanism of TAO Bittensor?

The consensus mechanism is designed to reward valuable nodes in the network. This incentivization mechanism incorporates game-theoretic scoring methods, including the application of Shapley Value, to assess the performance and reliability of models within the Bittensor network. The Shapley Value is a concept from cooperative game theory that assigns a value to each model based on its marginal contribution to the overall prediction accuracy and collective intelligence of the network.

In the context of Bittensor, the Shapley Value is used to determine the contribution of each model in achieving consensus and making accurate predictions. It takes into account the collaborative nature of the network, where models interact and exchange information to improve their collective performance. The Shapley Value captures the importance of each model by evaluating how much it enhances the accuracy and insights of the overall model ensemble.

During the scoring process, models are evaluated based on their individual predictive capabilities, their ability to provide valuable insights, and their alignment with the consensus reached by other models.
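To make the Shapley Value concrete, the sketch below computes exact values for a three-model ensemble by averaging each model's marginal contribution over every possible join order. The accuracy table is invented for illustration, and the function name `shapley_values` is hypothetical; the text does not specify how Bittensor approximates this in practice.

```python
from itertools import permutations
from typing import Callable, Dict, FrozenSet, Sequence


def shapley_values(
    players: Sequence[str],
    value: Callable[[FrozenSet[str]], float],
) -> Dict[str, float]:
    """Exact Shapley values: mean marginal contribution over all join orders."""
    totals = {player: 0.0 for player in players}
    orders = list(permutations(players))
    for order in orders:
        coalition: FrozenSet[str] = frozenset()
        for player in order:
            enlarged = coalition | {player}
            totals[player] += value(enlarged) - value(coalition)
            coalition = enlarged
    return {player: total / len(orders) for player, total in totals.items()}


# Made-up ensemble accuracies for every subset of models A, B and C.
ACCURACY = {
    frozenset(): 0.0,
    frozenset("A"): 0.6,
    frozenset("B"): 0.5,
    frozenset("C"): 0.5,
    frozenset("AB"): 0.8,
    frozenset("AC"): 0.7,
    frozenset("BC"): 0.6,
    frozenset("ABC"): 0.9,
}

values = shapley_values(["A", "B", "C"], ACCURACY.__getitem__)
```

The values always add up to the accuracy of the full ensemble, so they can be read as a fair split of the total result. Exact computation grows factorially with the number of models, which is why large networks would need an approximation.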

What is Proof of Intelligence?

Proof of Intelligence is a consensus mechanism used in the Bittensor network to reward nodes that contribute valuable machine-learning models and outputs to the network. It is a variation of the Proof of Work (PoW) and Proof of Stake (PoS) mechanisms used in blockchain networks, but instead of solving complex mathematical problems, nodes are required to perform machine learning tasks to demonstrate their intelligence. The more accurate and valuable the output of a node’s machine learning model, the higher the chance of being selected to add a new block to the chain and receiving rewards in the form of TAO tokens.

Decentralized Mixture of Experts (MoE)

In a decentralized mixture of experts (MoE) model, Bittensor utilizes the power of multiple neural networks to address complex problems. Each expert model specializes in a specific aspect of the data, and when new data is introduced, these experts collaborate to generate a collective prediction that surpasses the capabilities of any individual expert.

The primary objective of employing this approach is to tackle challenges that were previously beyond the scope of traditional centralized models. By harnessing the collective power of multiple expert models, Bittensor achieves higher prediction accuracy and can handle larger volumes of data. This method enables the network to provide more precise and comprehensive responses compared to relying on a single model.

For example, imagine a request for Python code with explanatory comments in Spanish. If only one model were responsible for this task, the response might be unsatisfactory. With the MoE approach, the strengths of each model in the network are used, and the weaknesses of one model are compensated for by another. In this case, model A is proficient in Spanish and model B excels at programming. Combining their strengths yields a response with accurate Spanish comments and well-written code.
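The combination step can be sketched as a gated weighted average. This is a generic mixture-of-experts illustration, assuming each expert returns a numeric vector and a gate supplies one relevance score per expert; the function names are hypothetical and the sketch does not describe Bittensor's actual routing.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn arbitrary gate scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def mixture_of_experts(
    expert_outputs: Sequence[Sequence[float]],
    gate_scores: Sequence[float],
) -> List[float]:
    """Blend expert output vectors, weighting each expert by its gate score."""
    weights = softmax(gate_scores)
    size = len(expert_outputs[0])
    return [
        sum(weight * output[i] for weight, output in zip(weights, expert_outputs))
        for i in range(size)
    ]


# Two hypothetical experts; the gate favours the first one for this input.
blended = mixture_of_experts([[0.9, 0.1], [0.2, 0.8]], gate_scores=[2.0, 0.0])
```

An expert with a higher gate score pulls the blended output toward its own answer, which is how the strengths of one model can outweigh the weaknesses of another for a given input.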

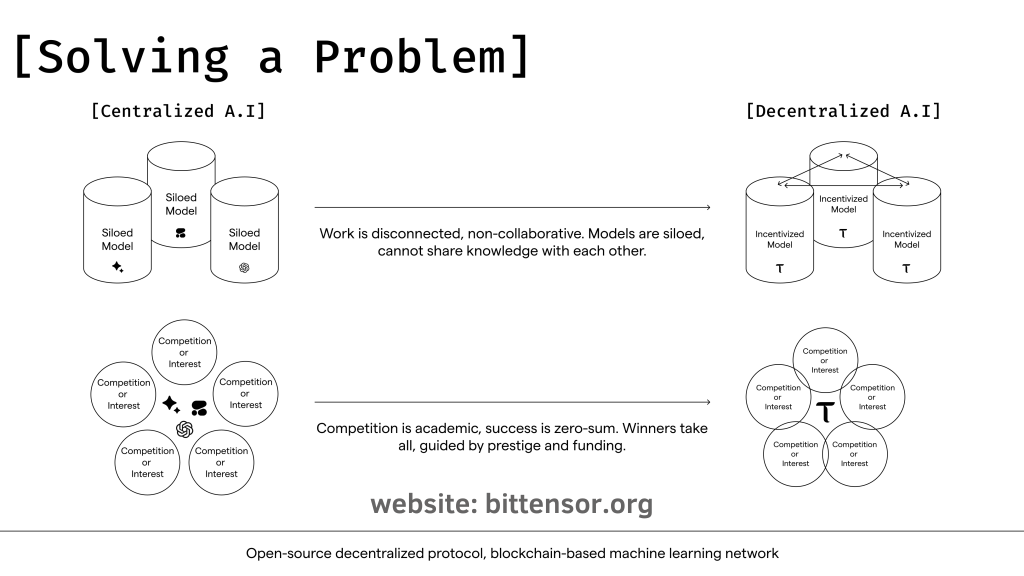

Which problem does Bittensor want to solve?

Training AI models requires vast amounts of data and computing power, which are mostly accessible only to large corporations and research institutions because of their high cost. This concentration has led to permissioned and siloed AI models, limiting collaboration and hindering compounding effects in AI development. Siloed models cannot learn from each other, and third-party integrations require permission, leading to limited functionality and value in the AI ecosystem.

Two main challenges keep AI development from scaling: the compute bottleneck and inefficiencies in algorithmic innovation.

The compute bottleneck in AI development refers to the significant computational power required to train large-scale AI models effectively. As AI models become more complex and data-intensive, the demand for computing resources increases exponentially. This poses a challenge for AI companies as they need to invest in expensive hardware or rely on cloud providers, which can lead to high costs, especially for smaller companies or startups.

On the other hand, inefficiencies in algorithmic innovation refer to the limitations in building upon existing AI models. When researchers develop new models, they often start from scratch, losing the intelligence or patterns learned by previous models. This lack of knowledge transfer hinders the progress of AI as a field and can lead to redundant efforts and wasted resources.

What is the Goal of Bittensor?

Bittensor aims to create a peer-to-peer marketplace that incentivizes the production of machine intelligence. Using techniques such as mixture of experts and knowledge distillation, the platform establishes a collaborative network where knowledge producers can sell their work and consumers can purchase this knowledge to enhance their own AI models. By fostering a “cognitive economy,” Bittensor seeks to facilitate knowledge sharing among researchers and encourage the development of more powerful AI models. The ultimate goal is to open new frontiers in AI by aggregating individual contributions of knowledge. As computers contribute their AI models and training to the Bittensor network, they are rewarded with TAO.

Ultimately, the vision is to create a pure market for artificial intelligence that is fair, transparent, and accessible to everyone.

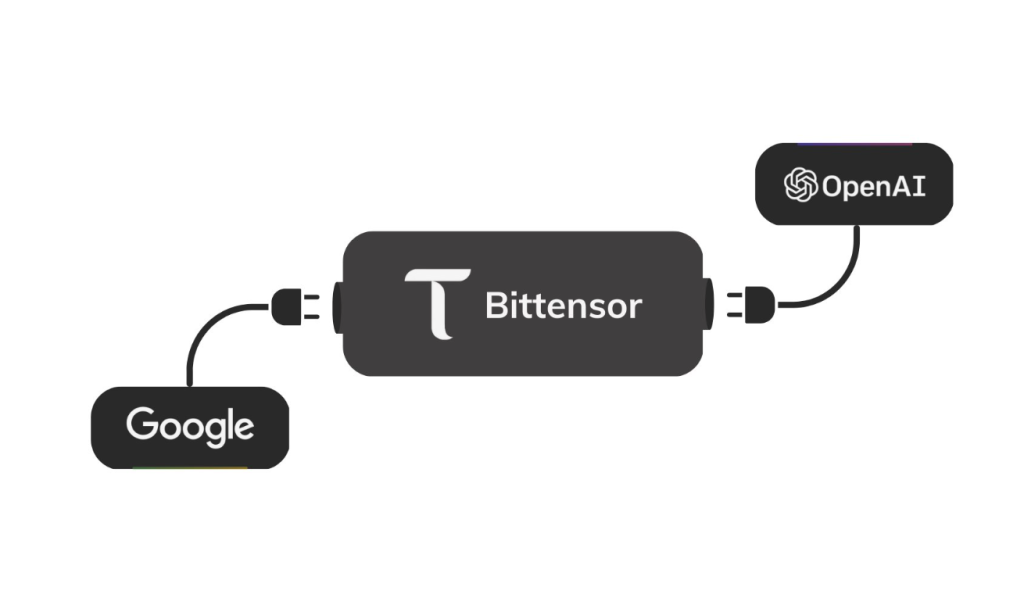

The expectation is that big companies like IBM, Google, or Microsoft, as well as smaller ones, will pay TAO to use the various models inside the Bittensor network for their own projects.

The argument behind this expectation is that the centralized AI industry is highly inefficient. For instance, according to this argument, Google alone spends 75% of its electricity costs on machine learning. As AI research compounds every year and new models have to relearn what previous models already learned, these companies would likely find it advantageous to use a large shared neural network like the one Bittensor aims to provide as an addition to their own machine learning networks.

Bittensor Network Structure

Bittensor’s architectural design draws inspiration from the cognitive processes observed in the human brain. Each computational unit in the Bittensor system, referred to as a “Neuron,” comprises three essential elements: a model, a dataset, and a loss function. These Neurons operate collaboratively, aiming to optimize their respective loss functions by leveraging input data and inter-neuronal communication.

The communication mechanism between two Bittensor Neurons mirrors the neural connections observed in biological neural networks. Each Neuron possesses an Axon terminal to receive inputs from other Neurons and a Dendrite terminal to transmit inputs to neighboring Neurons.

In the training process, a Bittensor Neuron performs dual tasks: it sends a batch of inputs from its dataset to neighboring Neurons and simultaneously processes the same batch through its internal model. Upon receipt, the neighboring Neurons process the input using their respective local models and return the output to the original Neuron. The original Neuron then consolidates these outputs and updates its gradients by considering both the loss incurred by the remote Neurons and its own local loss. This cooperative learning process fosters an intelligent and interconnected network of Neurons, exhibiting parallels to the cognitive processes observed in biological neural networks.
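The update described above can be sketched with a one-parameter model. The example assumes a linear model `y = w * x`, a mean-squared-error local loss, and a second mean-squared-error term that pulls the local output toward the outputs returned by a neighbor; the weighting `remote_weight` and all names are hypothetical choices for illustration, not the protocol's actual loss.

```python
from typing import List, Sequence, Tuple


def train_step(
    w: float,
    batch: Sequence[Tuple[float, float]],
    remote_outputs: Sequence[float],
    lr: float = 0.1,
    remote_weight: float = 0.5,
) -> float:
    """One gradient step on local MSE plus a weighted term toward remote outputs."""
    n = len(batch)
    grad = 0.0
    for (x, y), remote in zip(batch, remote_outputs):
        pred = w * x
        grad += 2 * (pred - y) * x / n                        # gradient of the local loss
        grad += remote_weight * 2 * (pred - remote) * x / n   # gradient of the remote term
    return w - lr * grad


# Hypothetical data: targets follow y = 2x, and the neighbor already models that.
batch: List[Tuple[float, float]] = [(1.0, 2.0), (2.0, 4.0)]
neighbor_outputs = [2.0 * x for x, _ in batch]

w = 0.0
for _ in range(20):
    w = train_step(w, batch, neighbor_outputs)
```

Because both loss terms agree in this toy setup, the local weight moves toward the neighbor's value; when they disagree, `remote_weight` controls how much the neighbor's view counts.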

What is the total supply of Bittensor?

The total supply of Bittensor (TAO) is 21 million tokens, which is the same as the total supply of Bitcoin. This fixed total supply is designed to limit inflation and maintain the scarcity and value of the token over time.
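The text gives only the 21 million cap. As a rough arithmetic sketch, assuming a Bitcoin-style halving schedule in which each era issues half as many tokens as the one before, a first era of 10.5 million tokens converges on that cap. The era size and the function name `total_issued` are assumptions made for illustration.

```python
def total_issued(first_era_supply: float, eras: int) -> float:
    """Sum issuance over `eras` halving eras, each issuing half of the previous era."""
    return sum(first_era_supply / 2**k for k in range(eras))


FIRST_ERA_SUPPLY = 10_500_000  # assumed size of the first era, not stated in the text

after_one_era = total_issued(FIRST_ERA_SUPPLY, 1)
after_two_eras = total_issued(FIRST_ERA_SUPPLY, 2)
after_many_eras = total_issued(FIRST_ERA_SUPPLY, 64)
```

The sum is a geometric series, 10.5 million times (1 + 1/2 + 1/4 + ...), which approaches 21 million without exceeding it.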

TAO tokens are released gradually over time through mining rewards, staking rewards, and community-driven initiatives. The tokens are used for governance, staking, and as a means of payment for accessing AI services and applications built on the Bittensor network.

Does TAO have its own Wallet?

Bittensor’s TAO token does not have its own dedicated wallet, but it can be stored and managed in compatible wallets that support the Polkadot ecosystem. The Polkadot JS Wallet is one such wallet that supports the TAO token, and it is a popular choice among users due to its user-friendly interface and range of features.

Once the TAO token has been added to the wallet, users can then send and receive TAO tokens, view their transaction history, and manage other aspects of their TAO holdings directly from their wallet.

After setting up their wallet and adding the TAO token, users can receive TAO at their wallet address and check their balance using the Bittensor blockchain explorer at explorer.finney.opentensor.ai.

Should I move TAO from the Polkadot JS wallet to the Bittensor web wallet?

To clarify, when you set up a Polkadot JS wallet or Bittensor wallet, the TAO tokens are not actually “stored” in the wallet itself. Instead, the wallet generates a public key and a private key, and the private key gives the wallet owner the ability to control and send TAO tokens on the chain.

This means that when you buy TAO and send it to your wallet’s public address, the tokens are recorded on the chain, and the wallet simply provides a means for you to access and control them using your private key.

Therefore, there is no need to transfer TAO tokens from a Polkadot JS wallet to a Bittensor wallet, as both wallets manage keys for the same chain. Doing so would be like creating two public addresses in one wallet and moving TAO from one address to the other.

It’s important to note that this concept applies to all coins and tokens on any blockchain. Wallets simply provide a user-friendly interface for accessing and controlling tokens using private keys.
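The idea that balances live on the chain rather than in the wallet can be shown with a toy model. Everything here is a simplification for illustration: `ToyChain` and `ToyWallet` are hypothetical names, the address is derived with a plain hash instead of real key-pair cryptography, and transfers are not signed.

```python
import hashlib
from typing import Dict


class ToyChain:
    """Stands in for the blockchain: the only place balances are recorded."""

    def __init__(self) -> None:
        self.balances: Dict[str, float] = {}

    def credit(self, address: str, amount: float) -> None:
        self.balances[address] = self.balances.get(address, 0.0) + amount

    def transfer(self, sender: str, recipient: str, amount: float) -> None:
        if self.balances.get(sender, 0.0) < amount:
            raise ValueError("insufficient balance")
        self.balances[sender] -= amount
        self.credit(recipient, amount)


class ToyWallet:
    """Holds a secret and derives an address from it; it holds no tokens itself."""

    def __init__(self, app_name: str, secret: bytes) -> None:
        self.app_name = app_name
        self._secret = secret
        # Toy derivation only: real wallets use proper key-pair cryptography.
        self.address = hashlib.sha256(secret).hexdigest()[:16]

    def balance(self, chain: ToyChain) -> float:
        return chain.balances.get(self.address, 0.0)


chain = ToyChain()
polkadot_js = ToyWallet("Polkadot JS", b"seed-one")
web_wallet = ToyWallet("Bittensor wallet", b"seed-two")

chain.credit(polkadot_js.address, 5.0)
chain.transfer(polkadot_js.address, web_wallet.address, 2.0)

# A different app given the same secret sees the same on-chain balance.
same_keys_elsewhere = ToyWallet("Another app", b"seed-one")
```

Moving TAO between the two wallets only changes entries in the chain's ledger, and any application that holds the same keys reads the same balance.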

Where can I buy TAO?

Here are some exchanges & platforms where you can buy TAO:

Investors of Bittensor

Investors in Bittensor (TAO) include:

- Digital Currency Group

- Polychain Capital

- FirstMark Capital

- GSR

Bittensor Team

Bittensor’s white paper names a pseudonymous author, “Yuma Rao,” much as Bitcoin’s white paper names Satoshi Nakamoto. It is not known whether this person really exists, and we may never learn more about him or her. However, the people behind the Bittensor Foundation are known and in the public eye. Many people work for the foundation, and some of them are former Google employees or researchers.

- Jacob Robert Steeves (Co-Founder)

- Ala Shaabana (Co-Founder)

Conclusion

The beauty of our system is its diversity. Any engineer or group of engineers can fine-tune a model, plug it in, and play the odds. The more diversity we can pull into the system, the higher the probability of inducing state-of-the-art results. Our system is scalable, and software can be built on top of it, meaning there is no limit to the quantity and complexity of innovation it could ultimately foster.

By creating an interactive, open-ended ecosystem for the development of artificial intelligence, we are not only harnessing a global supply of computing power, but we are also tapping into a global supply of innovation. This approach has the potential to drive progress in artificial intelligence and lead to groundbreaking results.

We invite researchers and engineers to join us on this journey to create a new paradigm for AI, one that leverages digital trust to create a market for pure machine intelligence. Together, we can build a more powerful and intelligent future.